If you have not yet read my previous article on my first magical A/B test, you should check it out here, or else you wouldn’t know what the hell I am talking about.

In this article, I will talk about my learnings from the experiments.

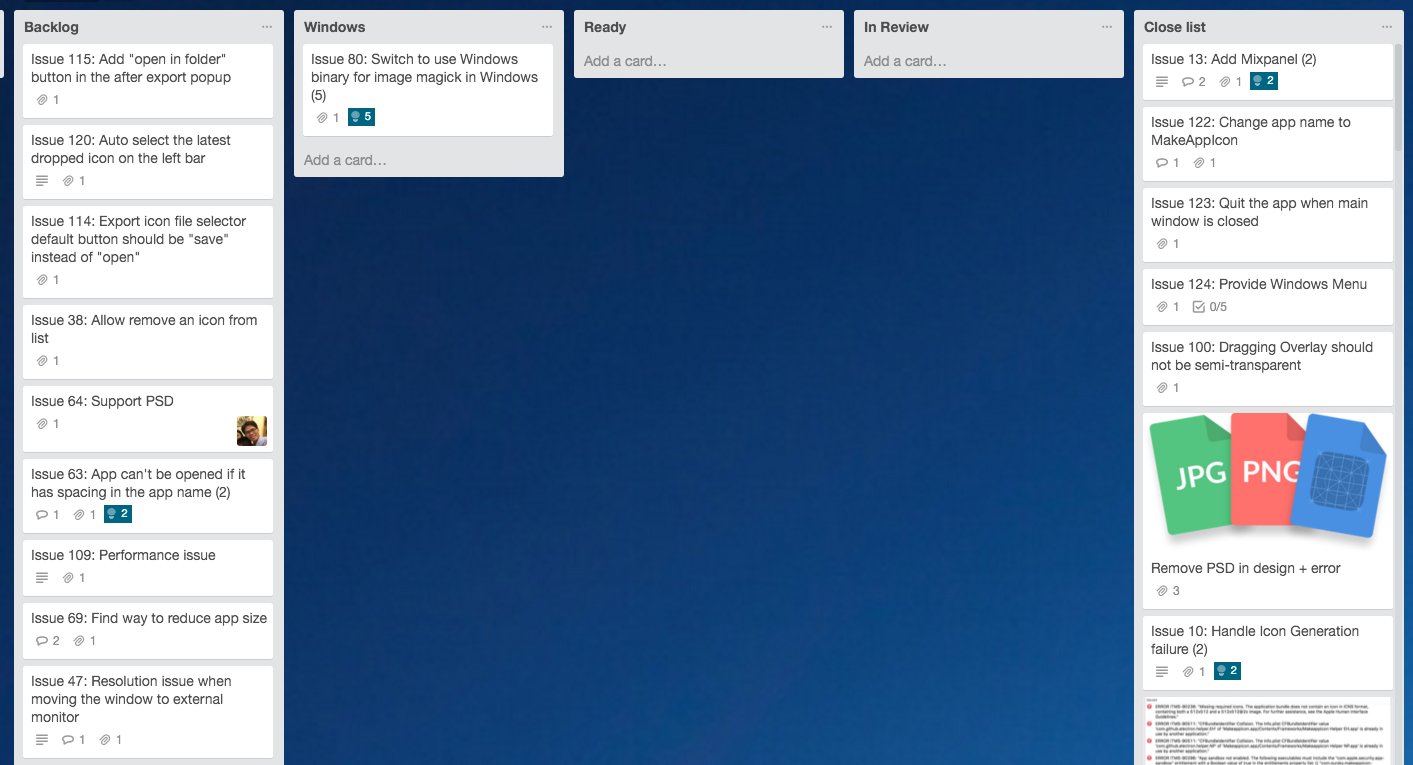

Mistake #1: I ran two challengers in the A/B test for Trial 1

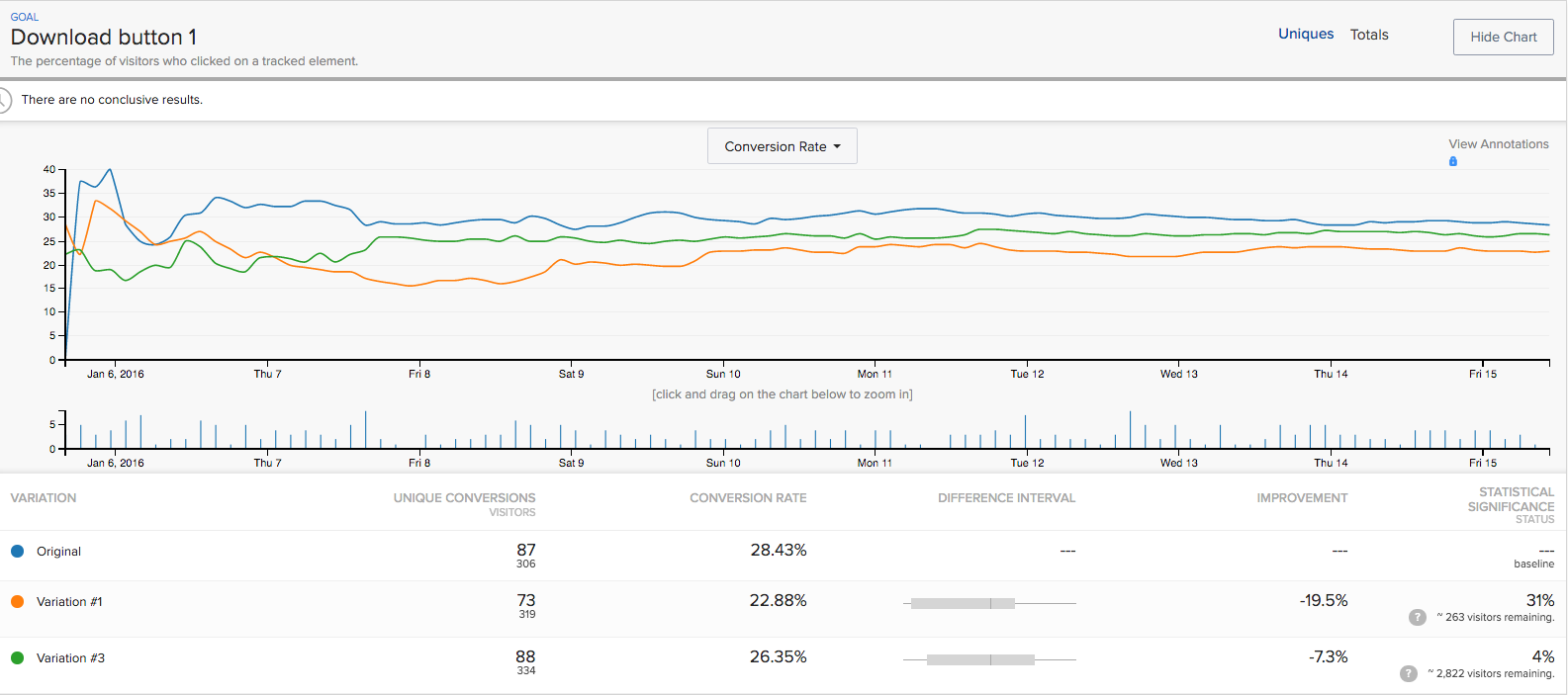

This was too greedy. For an A/B test to give a reliable result, it needs to reach a target confidence level. Ours was an internal target of 80%, while the industry standard is 95%. The confidence level indicates how confident we can be that one variation really is better than the other. You can set it lower to suit your situation, but remember that this also increases the chance that your result is wrong.

Roughly speaking, testing at 80% confidence means accepting about a 20% chance of declaring a winner when the difference is really just random noise; that 20% is the significance level. To reach a given confidence level, each variation needs a solid sample size. With only around 200 visitors a day split across three variations, each variation got only about 66 samples a day, so it would take weeks to reach the target confidence level.
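To make the idea of a confidence level more concrete, here is a minimal Python sketch of a pooled two-proportion z-test, which is one common way of comparing two conversion rates. It treats "confidence" as 1 minus the two-sided p-value. A/B testing tools may compute the confidence they report differently, and the function name and the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def ab_confidence(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Confidence (1 - two-sided p-value) that variations A and B convert differently.

    Uses a pooled two-proportion z-test. This is one common definition;
    A/B testing tools may report "confidence" in other ways.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate, assuming (null hypothesis) both variations convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Nobody (or everybody) converted: no evidence of any difference
        return 0.0
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return 1 - p_value


# Hypothetical counts: 10% vs 15% conversion at two different sample sizes
print(f"60 visitors each:  {ab_confidence(6, 60, 9, 60):.0%}")
print(f"600 visitors each: {ab_confidence(60, 600, 90, 600):.0%}")
```

Both calls use the same conversion rates and differ only in sample size. The smaller one stays well below an 80% target, while the larger one clears 95%. This is why splitting a small daily audience across more variations slows a test down.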

You should always aim to run one challenger at a time, unless you have thousands of visitors per day.

Mistake #2: I made small changes early on in Trial 1

The consequence of starting with small changes is that the difference in conversion rate between variations is likely to be small. Combined with two challengers, this means the test needs a huge sample size to become statistically confident, because you need many more visitors to show that a small difference in conversion is not merely due to randomness.
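To see why small differences need so much traffic, here is the textbook normal-approximation formula for the number of visitors each variation needs in a two-proportion test. It is only a sketch and not necessarily what any particular testing tool uses. In the formula, p_1 and p_2 are the control and challenger conversion rates, alpha is 1 minus the confidence level, and 1 - beta is the statistical power. The power is assumed to be 80%, a common default that the article does not mention. The 10% baseline and both lifts are hypothetical, and the z values are rounded.

```latex
n \approx \frac{\left(z_{1-\alpha/2} + z_{1-\beta}\right)^2 \left[p_1(1-p_1) + p_2(1-p_2)\right]}{(p_1 - p_2)^2}

% 95% confidence (z = 1.96), 80% power (z = 0.84), baseline p_1 = 0.10
\begin{aligned}
p_2 = 0.15:&\quad n \approx \frac{(1.96 + 0.84)^2\,(0.09 + 0.1275)}{0.05^2} \approx 682 \\
p_2 = 0.12:&\quad n \approx \frac{(1.96 + 0.84)^2\,(0.09 + 0.1056)}{0.02^2} \approx 3834
\end{aligned}
```

The difference is squared in the denominator. As a result, shrinking the lift from 5 to 2 percentage points multiplies the required sample per variation by more than five.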

In an A/B test, you should always start off with big changes: removing your seemingly promising video, changing photos to GIFs, adding a photo of a hot lady typing code, and so on. Changes like these will point you in the right direction for getting your visitors to convert.

For instance, in my second trial, conversion did not improve even when I provided a huge amount of information on functionality; the result suggested that visitors are not really concerned about ShotBot’s functionality.

It may feel uncomfortable, but don’t be afraid of making big changes; the result may surprise you. If visitors convert, keep going down the same path to improve conversion further.

Mistake #3: I did not set a hypothesis in Trial 1

A hypothesis is the extra step that gives you a clearer view of what you are actually testing.

The problem with my two challengers is that they basically tested the same thing. The hypothesis would have been something like ‘Developers need a quantified benefit to convert’, which is fine, but I certainly did not need to go through a couple of tests just to prove one hypothesis.

Personally, I don’t think you have to come up with a hypothesis before generating test ideas, but you do need to keep track of your experiments: what they are about and what you are trying to test or prove. That way, when others look at your tests in the future, they will understand which hypotheses you made and which were rejected, so they can head in a different direction.

If a hypothesis is not rejected, then you may continue to test around the hypothesis to improve conversion, making relatively small changes.

Reminder #1: Set a target confidence level

Before beginning a test, you should set a target confidence level. The industry standard is around 95%, but if you are a lean team like us, a target between 80% and 90% is reasonable and lets you run tests at a much faster pace than a target of 90% or higher.

I’ve also found a sample size calculator, which helps you estimate how many visitors you need to reach your target significance level.
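If you want to see roughly what such a calculator does under the hood, here is a minimal Python sketch. It assumes the textbook normal-approximation formula for comparing two conversion rates, with 80% statistical power by default. That power value is a common default, not something the article specifies. The function names and the 10% to 15% conversion figures are hypothetical, and the calculator linked above may use a different method. The sketch also converts the sample size into days at 200 visitors a day, split evenly across variations.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(p_control: float, p_challenger: float,
                              confidence: float = 0.95, power: float = 0.80) -> int:
    """Visitors needed in EACH variation (normal approximation, two-sided test).

    Assumption: 80% statistical power by default, a common choice in calculators.
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_challenger * (1 - p_challenger)
    effect = p_challenger - p_control
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_needed(n_per_variation: int, daily_visitors: int, variations: int) -> int:
    """Days until every variation reaches n, assuming traffic is split evenly."""
    visitors_per_variation_per_day = daily_visitors / variations
    return ceil(n_per_variation / visitors_per_variation_per_day)


if __name__ == "__main__":
    # Hypothetical scenario: 10% baseline conversion, hoping to detect a lift to 15%,
    # with 200 visitors a day split across the control and the challenger(s)
    for confidence in (0.80, 0.90, 0.95):
        n = sample_size_per_variation(0.10, 0.15, confidence=confidence)
        for variations in (2, 3):
            days = days_needed(n, 200, variations)
            print(f"{confidence:.0%} confidence, {variations} variations: "
                  f"{n} visitors each, about {days} days")
```

In this made-up scenario, lowering the target from 95% to 80% cuts the required sample per variation by roughly 40%. Going from three variations to two shortens the test further. With a smaller lift, such as 10% to 12%, the same calculation runs into weeks or even months at this traffic level.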

If you are into statistics, you can take a deeper look at this post by Minitab that discusses the statistics behind it.

Reminder #2: Set a target conversion rate

In short, know what is good enough. Don’t over-test, because A/B testing is time-consuming and there’s no end to it.

Reminder #3: Set a timeframe

You need to set a timeframe for each test trial and the entire test series.

For an individual test, if it is taking too long to reach the significance level, it is very likely that your improvement is too minor or your target significance level is too demanding.

For the entire test series, if you can’t reach your target conversion rate, the problem could be something more fundamental, such as a lack of demand for your product. That is definitely beyond what an A/B test can fix.

Reminder #4: It requires luck

The length of an A/B test can depend heavily on luck. I was just lucky to hit the sweet spot early. If you aren’t, don’t give up.

Good luck!

By the way, subscribe to us so that you don’t have to do stupid sh_t like I did.

Written by:

Dennis the intern. Doing all sorts of growth hacking, content marketing and data-driven goodies at Oursky. He loves cats too.