TL;DR: Google’s open-source TensorFlow library helps developers build computational graphs and comes with free additional modules that make AI/ML software development easier for consumer products and mobile apps. Developers need to know Python or C++. This piece covers three common modules and their possible business applications:

- Convolutional Neural Networks (CNN) for image recognition

- Sequence-to-Sequence (Seq2Seq) models for human language

- Large-scale linear models for data analysis

What’s in TensorFlow?

Open-sourced in 2015, TensorFlow is a Google framework for creating deep learning models. Deep learning is one of several categories of machine learning (ML) models; it uses multi-layer neural networks. In TensorFlow, users express computations by building a computational graph.

TensorFlow took the world by storm because it is free and (relatively) easy to use, and it gives developers with entry-level machine learning backgrounds access to a powerful library instead of building all their AI models from scratch. TensorFlow and its accompanying modules make ML/AI software development easier for both mobile apps and backend services.

How does TensorFlow fit into AI and machine learning?

TensorFlow is one of many machine learning libraries (other examples include CNTK and Theano). Machine learning is a field of computer science that gives computers the ability to learn without being explicitly programmed.[1] For example, AlphaGo Zero’s AI taught itself to play Go and outperformed its predecessor, AlphaGo, which had defeated the world champion in Go. Machine learning is useful for tasks where explicit algorithms don’t yield good results, such as user screening, sorting high-context data, and clustering for predictions and profiling.

Practical examples include detecting fraud or data breaches, email filtering, optical character recognition (OCR), and ranking.

In the next section, we’ll demystify, in human-speak, the computational graph, which is essential to understanding how TensorFlow works.

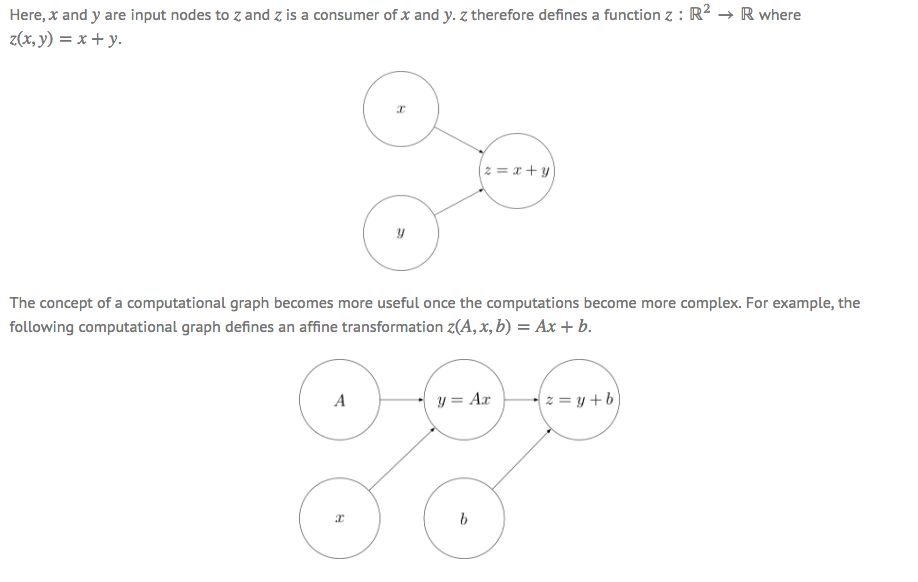

Trigger Warning: Computational graphs and a bit of theory

One of the most common terms heard in AI is neural networks. Neural networks can be represented as computational graphs. A computational graph is a directed graph whose nodes are operations or variables, with each node's output feeding into the nodes that depend on it. Each node therefore defines a function of the variables that feed into it. Most deep learning models and neural networks are, at heart, mathematical models, and computational graphs are a way to represent and implement them.
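The idea can be made concrete with a small pure-Python sketch. It is not TensorFlow's API: the Node class and the variable, add, mul, and sigmoid helpers are hypothetical names chosen for illustration. Each node is either a variable holding a value or an operation whose inputs are other nodes, and evaluating the output node walks back through the graph to its inputs. The graph below encodes a single artificial neuron, y = sigmoid(w * x + b).

```python
import math


class Node:
    """A node in a tiny computational graph: a variable or an operation."""

    def __init__(self, op=None, inputs=(), value=None, name=""):
        self.op = op          # function applied to input values; None for variables
        self.inputs = inputs  # upstream nodes whose outputs feed into this one
        self.value = value    # stored value, used only by variables
        self.name = name

    def evaluate(self):
        # Variables return their stored value; operations evaluate their inputs first.
        if self.op is None:
            return self.value
        return self.op(*(node.evaluate() for node in self.inputs))


def variable(value, name=""):
    return Node(value=value, name=name)


def add(a, b):
    return Node(op=lambda x, y: x + y, inputs=(a, b), name="add")


def mul(a, b):
    return Node(op=lambda x, y: x * y, inputs=(a, b), name="mul")


def sigmoid(a):
    return Node(op=lambda x: 1.0 / (1.0 + math.exp(-x)), inputs=(a,), name="sigmoid")


# A single artificial "neuron": y = sigmoid(w * x + b)
x = variable(2.0, "x")
w = variable(0.5, "w")
b = variable(-1.0, "b")
y = sigmoid(add(mul(w, x), b))

print(y.evaluate())  # 0.5, because w * x + b = 0.0 and sigmoid(0) = 0.5
```

TensorFlow builds and executes graphs like this one at much larger scale, adding features such as automatic differentiation so that weights like w and b can be trained.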

If you remember some linear algebra and calculus, check out the excellent tutorial Deep Learning from Scratch.

What do developers need to do to use TensorFlow?

TensorFlow was built with processing-power limitations in mind (see TensorFlow Lite and TensorFlow Mobile), making it easier for mobile and web developers to use the library and create AI-powered features for consumer products. Developers with a basic background in neural networks can use the framework for datasets, estimators, training, and inference.

Developers with no background in neural networks may want to start with a higher-level neural network API, such as Keras (keras.io). Written in Python, Keras can run on top of TensorFlow, CNTK, or Theano and is well suited to fast, easy prototyping.

To start with TensorFlow, a developer needs to know:

- Python or C++

- A little about arrays (specifically numpy.array, provided by NumPy, the numerical computation library for Python that TensorFlow uses to handle arrays and matrices)

3 Common Applications for TensorFlow

The TensorFlow library includes many modules that perform a variety of AI/ML functions. Below are three commonly used ones:

- Convolutional Neural Networks (CNN) for image recognition and processing

- Sequence-to-Sequence (Seq2Seq) models for human language-related features

- Large-scale linear models for data analysis and simple behavioural predictions

Below are some examples of commercial and consumer applications:

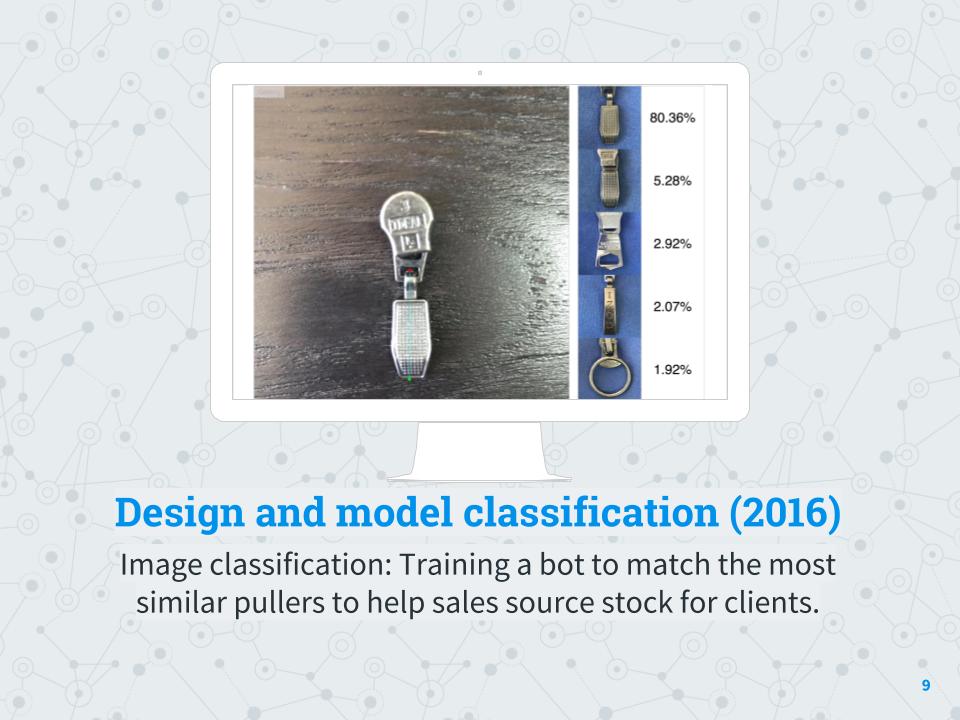

Convolutional Neural Networks (CNN) and Image Recognition

One of the many applications for CNNs is to help machines recognize images and interpret them in a way that is intuitive to humans. For example, a computer can learn to recognize that the coloured dots in an image combine to form a cake. To check accuracy, the model is trained on data where the correct answers are already known. The CNN learns to identify an image, checks whether its answer is correct, and improves its ability to make sense of the coloured clusters. The true test of performance, however, is giving the model a new dataset it has not seen before.
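The "coloured clusters" a CNN learns to read are picked out by convolution: a small grid of weights (a kernel) slides across the image and computes a weighted sum at each position, responding strongly wherever the pixels match its pattern. The following is a minimal pure-Python sketch, not TensorFlow code; the 4x4 image and the hand-written edge-detecting kernel are made up for illustration. In a real CNN, layers such as tf.keras.layers.Conv2D learn their kernel values during training instead.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (both lists of lists), no padding, stride 1.

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped), which CNNs conventionally call convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 4x4 grayscale "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# A hand-written vertical-edge detector; a trained CNN learns kernels like this.
kernel = [
    [-1, 1],
    [-1, 1],
]

print(conv2d(image, kernel))  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The output is non-zero only in the middle column, where the kernel straddles the edge. Stacking many learned filters, with nonlinearities in between, lets a CNN build up from edges to shapes to whole objects.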

Transfer learning is a shortcut technique that takes a fully trained model and repurposes it for a new image classification task, rather than retraining a model from scratch every time. ImageNet is a good source of image training data.

CNNs can be applied in a range of fields, such as:

- image classification for business applications, such as our client project for matching and sorting inventory

- medical diagnostics to match patient image data with known visual symptoms for medical conditions

- line sensors in sports that make in/out calls based on high-resolution image data

- risk assessment for applications (such as for insurance or a credit card)

The benefit of starting early with AI/ML is that early adopters will have larger datasets to work with than their competitors years from now.

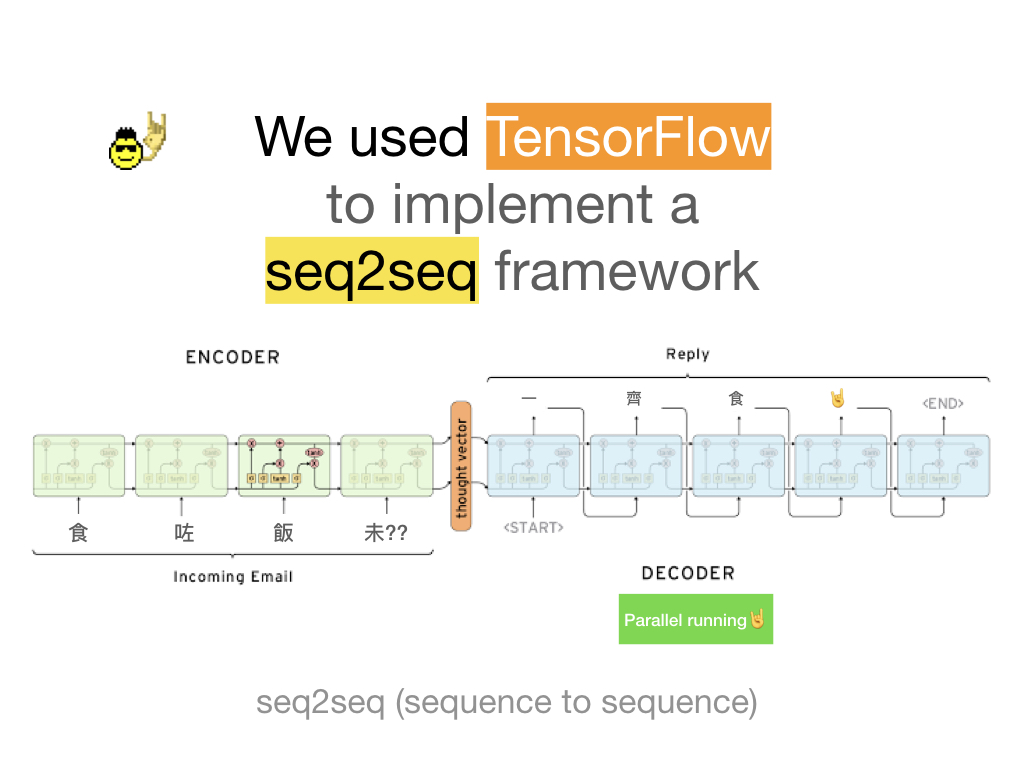

Sequence-to-Sequence models and human language

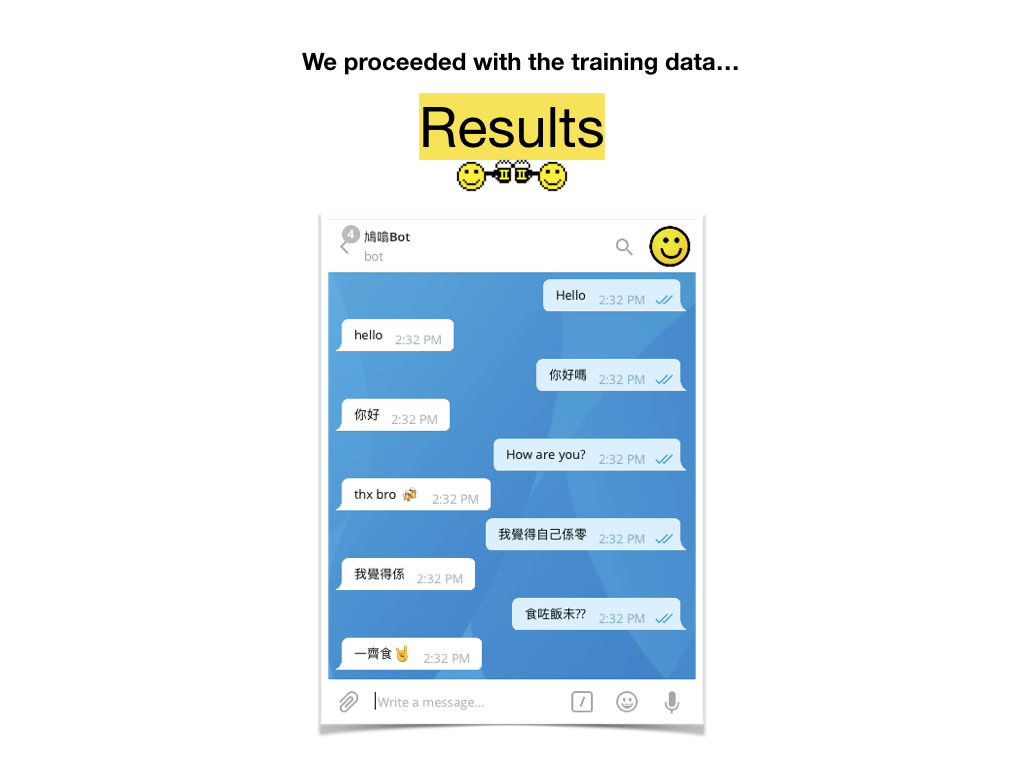

Sequence-to-Sequence (Seq2Seq) models use recurrent neural networks (RNNs) as a building block. During training, the model is fed many sentence pairs so that it learns to generate one sentence from another. These sentence pairs can be anything. For example, when they are sentences in two different languages, the model can be used for translation. When they are pairs of conversational messages, the model can be used for chatbots.
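The recurrent building block can be sketched in a few lines. A minimal Seq2Seq encoder reads the input sentence one word vector at a time and updates a hidden state with h = tanh(W_in x + W_rec h + b); a decoder (not shown) would then generate the output sentence from the final state. This is a pure-Python illustration, not TensorFlow's API, and the word vectors and weights are hypothetical values. Practical Seq2Seq models usually use LSTM or GRU cells and learn their weights during training.

```python
import math


def rnn_encode(sequence, w_in, w_rec, bias):
    """Fold a sequence of input vectors into one hidden state.

    Each step applies h = tanh(W_in x + W_rec h + b), the basic recurrent
    update that an encoder repeats over every word in the input sentence.
    """
    hidden_size = len(bias)
    h = [0.0] * hidden_size  # initial hidden state
    for x in sequence:
        new_h = []
        for k in range(hidden_size):
            total = bias[k]
            total += sum(w * xi for w, xi in zip(w_in[k], x))  # input contribution
            total += sum(w * hj for w, hj in zip(w_rec[k], h))  # memory of earlier words
            new_h.append(math.tanh(total))
        h = new_h
    return h


# Hypothetical 2-dimensional "word vectors" for a three-word sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Small fixed weights for illustration; a real model learns these in training.
w_in = [[0.5, -0.3], [0.2, 0.4]]
w_rec = [[0.1, 0.0], [0.0, 0.1]]
bias = [0.0, 0.0]

state = rnn_encode(sentence, w_in, w_rec, bias)
reversed_state = rnn_encode(sentence[::-1], w_in, w_rec, bias)
print(state)
print(state != reversed_state)  # True: word order changes the encoding
```

Because the hidden state is carried from one step to the next, the same words in a different order produce a different encoding, which is what lets the decoder respond to sentence structure rather than just a bag of words.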

One interesting point is that we only need to feed in a raw word sequence to get back an output word sequence with mostly correct grammar (not perfect, but at least understandable). This means that Seq2Seq models learn the language model implicitly from the training samples, whereas in traditional NLP the language model usually has to be taught explicitly.

Language modeling (in short, estimating the probability that a word will appear given the preceding sequence of words) is key to many interesting problems, such as:

- Speech recognition for real-time subtitling

- AI chatbots, such as our Cantonese Chatbot for Telegram

- Virtual assistants like Apple’s Siri, Amazon’s Alexa, and the Google Assistant

- Machine translation

- Document summarization

- Batch image captioning
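Neural language models learn these probabilities implicitly, but the quantity itself can be illustrated with a much simpler, count-based technique: a bigram model, which estimates the probability of the next word from how often it followed the previous word in a training corpus. The following pure-Python sketch is a swapped-in illustration, not how TensorFlow or Seq2Seq models compute it, and the three-sentence corpus is made up.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split()  # <s> marks the sentence start
        for prev, word in zip(words, words[1:]):
            counts[prev][word] += 1
    return counts


def next_word_probability(counts, prev, word):
    """Estimate P(word | prev) as count(prev, word) / count(prev, any word)."""
    total = sum(counts[prev].values())
    return counts[prev][word] / total if total else 0.0


corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat ate the fish",
]
model = train_bigram_model(corpus)

print(next_word_probability(model, "the", "cat"))  # 0.3333333333333333 (2 of 6 words after "the")
print(next_word_probability(model, "sat", "on"))   # 1.0
```

A neural model replaces these raw counts with learned parameters, which lets it condition on much longer contexts than a single previous word.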

Large-Scale Linear Models

Large-scale linear models are simpler than neural networks with many layers. But on the principle of using the right tool for the job, linear models are still powerful when applied to the appropriate cases. Compared to deep neural nets, linear models train more quickly, can work well on large feature sets, and can be interpreted and debugged more easily. As a general approach, linear models are effective for crunching data and making simple predictions for businesses with large datasets.

Linear models can be used for binary classification (predicting one of two outcomes), multiclass classification (predicting one of several outcomes), and regression (predicting a numeric value). They can be used to evaluate big data, such as census or financial data.

As an example, Amazon ML uses linear models in e-commerce to answer questions such as:

- Will the customer buy this product or not buy this product? (Binary Classification)

- Is this product a computer, smartphone, or accessory? (Multiclass Classification)

- What is an appropriate price for this car model and vintage? (Regression)
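The first question above, a binary classification, can be sketched with logistic regression, a linear model that passes a weighted sum of features through a sigmoid to produce a probability. The following is a minimal pure-Python sketch trained with batch gradient descent, not Amazon ML's or TensorFlow's implementation. The two features (imagine pages viewed and time on site, already standardized to zero mean) and the six labelled examples are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.1, epochs=1000):
    """Fit weights w and bias b so that sigmoid(w . x + b) approximates P(y = 1 | x).

    Uses plain batch gradient descent on the log loss.
    """
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this example (predicted probability minus label).
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += error * xi[j]
            grad_b += error
        # Average the gradients over the batch and take a step downhill.
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def predict(w, b, x):
    """Return 1 ("will buy") if the modelled probability is at least 0.5, else 0."""
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5)


# Hypothetical standardized features: [pages viewed, time on site]; label 1 = bought.
X = [[-2.0, -1.0], [-1.0, -2.0], [-2.0, -2.0], [2.0, 1.0], [1.0, 2.0], [2.0, 2.0]]
y = [0, 0, 0, 1, 1, 1]

w, b = train_logistic_regression(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1, 1, 1]
print(predict(w, b, [1.5, 1.0]))      # 1
```

Because the model is linear, its learned weights are directly interpretable: here both weights come out positive, meaning that more engagement pushes the prediction towards "buy". That transparency is part of why linear models are easy to debug.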

Of course, we’ve only scratched the surface of what AI/ML can do. If you would like to learn more about how AI-powered features can be incorporated into your product, get in touch.